6 Powerful Strategies to Align Stakeholders on Content Moderation Policies for Online Communities

Imagine trying to build a cohesive, engaged community, only to face constant disagreements over what content should be allowed. Sound familiar? This is a common challenge when aligning stakeholders on content moderation.

As a life coach, I’ve helped many professionals navigate these challenges. In my experience, aligning stakeholders on content moderation policies is crucial both for effective platform governance and for developing sound user-generated content policies.

In this article, you’ll discover strategies for creating consistent community guidelines and fostering collaboration. We’ll cover multi-stakeholder approaches, transparency, and localized moderation, all essential content moderation best practices. These strategies will help you balance free expression with effective moderation.

Let’s dive into these collaborative policy development techniques.

Why Content Moderation Conflicts Are So Challenging

Let’s face it, aligning stakeholders on content moderation is a minefield. Many clients initially struggle with defining what constitutes offensive content in their user-generated content policies.

These disagreements often stem from differing interpretations of community guidelines and of how the platform should be governed.

Balancing privacy concerns with community safety adds another layer of complexity to social media moderation. In my experience, people often find it hard to enforce rules without overstepping boundaries.

Regulatory compliance complicates matters further. Keeping up with evolving online safety laws while ensuring fair moderation is no small feat.

The pain is real. Without effective stakeholder engagement, misalignment can lead to chaos and mistrust among users.

You need a robust approach that draws on content moderation best practices and the right community management tools.

Roadmap to Align Stakeholders on Content Moderation Policies

Overcoming this challenge requires a few key steps. Here are the main areas to focus on to make progress in aligning stakeholders on content moderation.

- Establish a multi-stakeholder advisory board: Bring together community leaders, legal experts, and tech representatives to develop collaborative content moderation best practices.

- Conduct stakeholder workshops on key issues: Identify the major content moderation concerns and discuss how your community guidelines should address them.

- Create a shared policy development framework: Outline the policy development process collaboratively, incorporating stakeholder engagement strategies.

- Implement transparent reporting mechanisms: Develop and publish reports on moderation activities to enhance digital platform governance.

- Develop localized content moderation guidelines: Incorporate local cultural and legal contexts into user-generated content policies.

- Set up regular cross-functional review meetings: Review ongoing issues and emerging trends in content moderation.

Let’s dive in to explore these strategies for aligning stakeholders on content moderation!

1: Establish a multi-stakeholder advisory board

Creating a multi-stakeholder advisory board is critical for aligning stakeholders and building a balanced, inclusive content moderation policy.

Actionable Steps:

- Identify and invite key stakeholders: Gather community leaders, legal experts, and tech representatives to develop online community guidelines. Measure the number of stakeholders onboarded within three months.

- Develop a charter: Outline the board’s purpose, responsibilities, and decision-making processes. Aim to have the charter completed and approved by all stakeholders within one month.

- Schedule regular meetings: Ensure continuous engagement and input on user-generated content policies. Track the frequency of meetings and attendance rates.

Key benefits of a multi-stakeholder advisory board include:

- More diverse perspectives in moderation decisions

- Improved accountability and transparency in how moderation decisions are made

- Greater community trust and engagement

Explanation: These steps ensure diverse perspectives are considered, fostering balanced and fair content moderation.

Regular engagement maintains momentum and trust among stakeholders involved in collaborative policy development.

For more insights on collaborative approaches, check out this article on content moderation.

Let’s move forward to the next crucial step in aligning stakeholders on content moderation.

2: Conduct stakeholder workshops on key issues

Organizing stakeholder workshops is crucial for surfacing key content moderation concerns and building agreement on how to address them.

Actionable Steps:

- Organize workshops: Schedule sessions to discuss key moderation issues and best practices. Measure the number of workshops held and collect participant feedback.

- Use interactive exercises: Facilitate exercises that build collaboration and a shared understanding of the community guidelines. Track engagement levels and the number of solutions generated.

- Summarize findings: Share workshop summaries with the broader community for transparency in digital platform governance. Measure the distribution and reach of these summaries.

Explanation: These stakeholder engagement strategies ensure active participation and diverse input, leading to more comprehensive user-generated content policies.

Regular workshops also build trust and foster a sense of community among stakeholders, supporting effective social media moderation.

For more detailed insights, refer to this study on conflict management in online communities.

Workshops pave the way for collaborative, effective content moderation that balances free expression with the need for moderation.

3: Create a shared policy development framework

Creating a shared policy development framework is essential for aligning stakeholders on content moderation and establishing consistent and fair online community guidelines.

Actionable Steps:

- Draft a collaborative framework: Outline the policy development process, including stakeholder engagement strategies. Aim to complete and endorse the framework within one month.

- Set up a collaboration platform: Use a shared digital workspace for drafting user-generated content policies and gathering feedback. Track engagement and input with your community management tools.

- Pilot test a specific policy: Use the framework to develop one policy and refine it based on stakeholder feedback. Measure the pilot’s success and record the improvement areas it reveals.

Explanation: These steps ensure that all stakeholders have a say in policy development, fostering buy-in and consistency in digital platform governance.

A collaborative platform and pilot testing enhance transparency and effectiveness in social media moderation. For more insights, refer to this article on content moderation that emphasizes collaboration and human rights.

Creating a shared framework sets the stage for a cohesive moderation strategy, balancing free speech and moderation while adhering to online safety regulations.

4: Implement transparent reporting mechanisms

Transparent reporting mechanisms are vital for building trust and accountability in content moderation, and both are crucial for keeping stakeholders aligned.

Actionable Steps:

- Develop a comprehensive reporting system: Create a system that logs every content moderation action and decision, along with the community guideline each decision was based on. Track the system’s rollout and user satisfaction.

- Publish regular moderation reports: Share frequent reports detailing moderation activities and outcomes. Track how often reports are published and how comprehensive they are.

- Enable community feedback: Let users comment on reports so transparency keeps improving. Measure the volume and quality of feedback received.

Key elements of effective transparent reporting:

- Clear, accessible language

- Regular, consistent reporting schedule

- Detailed breakdowns of moderation actions

Explanation: These steps ensure transparency, fostering trust and accountability among stakeholders. Regular reporting and feedback help align content moderation with community expectations and user-generated content policies.
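The "detailed breakdowns of moderation actions" described above can start from a simple log. The Python below is a minimal sketch, not a feature of any particular moderation tool: the `ModerationAction` record, its fields, and the `build_report` helper are hypothetical names chosen for illustration. It filters logged decisions to a reporting period and counts them by action and by guideline, the kind of summary a regular transparency report might publish.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ModerationAction:
    """One logged moderation decision (hypothetical schema)."""
    day: date
    action: str            # e.g. "removed", "warned"
    rule: str              # community guideline the decision was based on
    appealed: bool = False


def build_report(log, start, end):
    """Summarize logged actions between start and end, inclusive."""
    period = [a for a in log if start <= a.day <= end]
    appeals = sum(a.appealed for a in period)
    return {
        "period": f"{start.isoformat()} to {end.isoformat()}",
        "total_actions": len(period),
        "by_action": dict(Counter(a.action for a in period)),
        "by_rule": dict(Counter(a.rule for a in period)),
        # Share of actions in the period that users appealed.
        "appeal_rate": appeals / len(period) if period else 0.0,
    }


if __name__ == "__main__":
    log = [
        ModerationAction(date(2024, 1, 3), "removed", "harassment", appealed=True),
        ModerationAction(date(2024, 1, 9), "warned", "spam"),
        ModerationAction(date(2024, 1, 20), "removed", "spam"),
        ModerationAction(date(2024, 2, 2), "removed", "harassment"),
    ]
    print(build_report(log, date(2024, 1, 1), date(2024, 1, 31)))
```

Counting by guideline as well as by action lets stakeholders see which rules drive most enforcement, which gives the cross-functional review meetings concrete material to discuss.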

For a deeper understanding, refer to this article on content moderation software that highlights the importance of transparency.

Building transparent reporting mechanisms is a crucial step toward effective content moderation and lasting stakeholder alignment.

5: Develop localized content moderation guidelines

Developing localized content moderation guidelines ensures your policies reflect diverse cultural and legal contexts, which is crucial for keeping stakeholders aligned.

Actionable Steps:

- Research local contexts: Investigate local cultural norms and legal requirements to inform your guidelines. Measure how well diverse perspectives are represented in the guidelines.

- Collaborate with local experts: Engage local experts to ensure guidelines are relevant and effective. Track the number of experts consulted and how much of their input is integrated.

- Regularly update guidelines: Adapt guidelines as norms and regulations evolve. Measure how often they are updated and whether stakeholders approve the changes.

Explanation: These steps matter because they help create fair and relevant content moderation policies. Localizing guidelines builds trust and helps ensure compliance with local laws, keeping stakeholders aligned on moderation practices.
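One way to keep localized guidelines consistent is to treat them as a shared baseline plus regional overrides. The Python sketch below assumes a hypothetical structure (`BASELINE`, `REGIONAL_OVERRIDES`, placeholder region names, and example enforcement actions); your actual rules and regions would come from the local research and expert consultations described above. It also flags regions whose local guidance hasn't been reviewed recently, which supports the regular-update step.

```python
from datetime import date, timedelta

# Hypothetical global baseline: guideline name -> default enforcement action.
BASELINE = {
    "harassment": "remove",
    "spam": "remove",
    "graphic_violence": "label",
    "political_ads": "allow",
}

# Hypothetical regional overrides, drafted with local experts.
# Each region lists only the rules that differ from the baseline,
# plus the date its local guidance was last reviewed.
REGIONAL_OVERRIDES = {
    "region_a": {"rules": {"political_ads": "label"}, "last_reviewed": date(2024, 3, 1)},
    "region_b": {"rules": {"graphic_violence": "remove"}, "last_reviewed": date(2023, 6, 15)},
}


def guidelines_for(region):
    """Return a copy of the baseline with any regional overrides applied."""
    merged = dict(BASELINE)
    override = REGIONAL_OVERRIDES.get(region)
    if override:
        merged.update(override["rules"])
    return merged


def regions_due_for_review(today, max_age_days=180):
    """List regions whose local guidance is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(
        name
        for name, override in REGIONAL_OVERRIDES.items()
        if override["last_reviewed"] < cutoff
    )


if __name__ == "__main__":
    print(guidelines_for("region_a"))
    print(regions_due_for_review(date(2024, 4, 1)))
```

Storing only the differences from the baseline keeps each region's guidance short and makes it obvious to stakeholders where, and how far, local rules diverge.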

For further insights, read this article on content moderation and human rights.

Localized guidelines pave the way for a more inclusive and effective moderation strategy, essential for user-generated content policies and social media moderation.

6: Set up regular cross-functional review meetings

Regular cross-functional review meetings are essential for continuously improving content moderation policies and keeping stakeholders aligned.

Actionable Steps:

- Schedule meetings every two weeks: Ensure representatives from the legal, technical, and community management teams attend. Track attendance and meeting frequency.

- Review ongoing issues: Address current and emerging trends in content moderation and digital platform governance. Measure the number of issues resolved.

- Document and share outcomes: Keep detailed records of meeting discussions and decisions on user-generated content policies. Ensure documents are accessible to all stakeholders.
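The attendance and resolution metrics in these steps are simple ratios. As a hedged illustration, the Python sketch below uses a hypothetical `ReviewMeeting` record (teams invited, teams that attended, issues raised, issues resolved) to compute one team's attendance rate and the overall share of raised issues that were resolved.

```python
from dataclasses import dataclass


@dataclass
class ReviewMeeting:
    """One cross-functional review meeting (hypothetical record)."""
    invited: set          # teams invited, e.g. {"legal", "technical"}
    attended: set         # teams that actually showed up
    issues_raised: int
    issues_resolved: int


def attendance_rate(meetings, team):
    """Share of the meetings a team was invited to that it attended."""
    invited = [m for m in meetings if team in m.invited]
    if not invited:
        return 0.0
    return sum(team in m.attended for m in invited) / len(invited)


def resolution_rate(meetings):
    """Share of all raised issues that were resolved across meetings."""
    raised = sum(m.issues_raised for m in meetings)
    resolved = sum(m.issues_resolved for m in meetings)
    return resolved / raised if raised else 0.0


if __name__ == "__main__":
    teams = {"legal", "technical", "community"}
    meetings = [
        ReviewMeeting(teams, {"legal", "community"}, 4, 3),
        ReviewMeeting(teams, teams, 2, 1),
    ]
    print(attendance_rate(meetings, "technical"), resolution_rate(meetings))
```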

Benefits of cross-functional review meetings include:

- Improved coordination between teams for collaborative policy development

- Faster response to emerging issues in social media moderation

- Continuous refinement of moderation strategies and online community guidelines

Explanation: These steps foster collaboration and ensure that all perspectives are considered in decision-making, aligning with content moderation best practices.

Regular reviews help keep policies up to date and effective, balancing free expression with safety. For more insights, read this article on balancing freedom and safety in content moderation.

These regular meetings will keep your moderation strategies relevant and effective, ensuring ongoing stakeholder alignment and compliance with online safety regulations.

Partner with Alleo to Align Stakeholders on Content Moderation

We’ve explored the challenges of aligning stakeholders on content moderation policies and the steps to achieve it. But did you know you can work directly with Alleo to make this journey easier and faster?

Set up an Alleo account in minutes. Create a personalized content moderation plan tailored to your needs, incorporating content moderation best practices and online community guidelines.

Work with Alleo’s AI coach on specific challenges like stakeholder engagement and collaborative policy development.

Alleo’s coach will follow up on your progress in implementing your user-generated content policies. It will help you adjust as your plans change and keep you accountable via text and push notifications.

You’ll receive tailored coaching support on social media moderation and online safety regulations, just like a human coach, but more affordable.

Ready to get started for free and begin aligning stakeholders on content moderation?

Let me show you how!

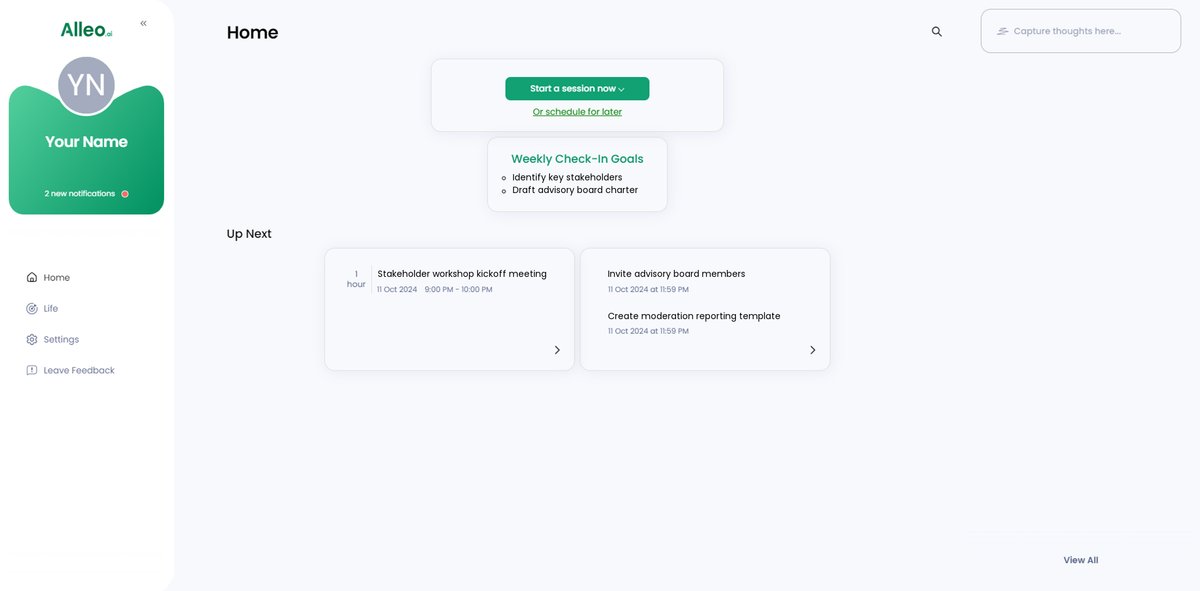

Step 1: Log In or Create Your Account

To begin aligning stakeholders on content moderation policies, log in to your existing Alleo account or create a new one in just a few clicks.

Step 2: Choose “Building better habits and routines”

Click on “Building better habits and routines” to develop consistent practices for content moderation, helping you create a more structured approach to aligning stakeholders and implementing effective policies.

Step 3: Select “Career” as Your Focus Area

Choose “Career” as your focus area in Alleo to get tailored guidance on content moderation strategies, stakeholder alignment, and professional development in the field of online community management.

Step 4: Start a Coaching Session

Begin your journey with Alleo by scheduling an initial intake session, where you’ll collaborate with our AI coach to establish your personalized content moderation plan and set clear objectives for aligning stakeholders.

Step 5: View and Manage Goals After the Session

After your coaching session, check the Alleo app’s home page to view and manage the goals you discussed, so you stay on track with implementing your new moderation strategies.

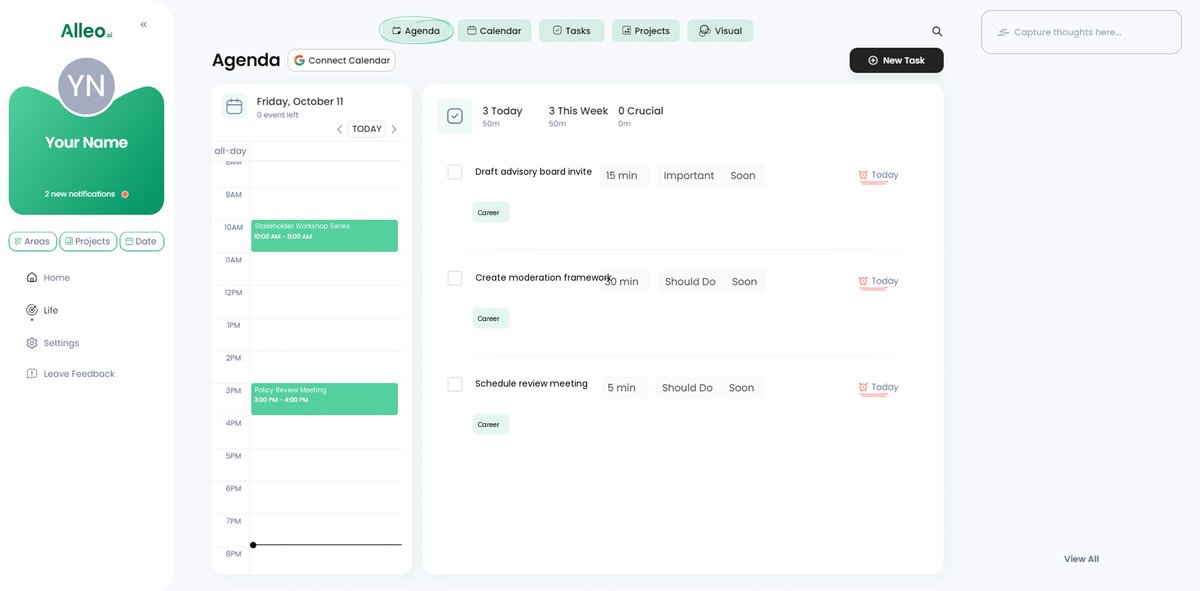

Step 6: Add Events to Your Calendar or App

Schedule your content moderation meetings and tasks in Alleo’s calendar, allowing you to track your progress in aligning stakeholders and implementing effective policies while receiving timely reminders and updates from your AI coach.

Bringing It All Together: Your Path to Stakeholder Alignment

Building a cohesive community can be challenging. Yet, with the right strategies, you can effectively align stakeholders on content moderation.

Remember, a multi-stakeholder approach fosters collaboration and transparency in digital platform governance. Regular workshops, transparent reporting, and localized online community guidelines can help you achieve this.

Don’t forget to set up cross-functional meetings. They drive continuous improvement of your user-generated content policies and moderation practices.

I know this journey towards aligning stakeholders on content moderation isn’t easy. But you’re not alone.

Use Alleo to simplify the process of stakeholder engagement. With our AI coach and community management tools, you’ll align stakeholders and build a fair online community that balances free expression with effective moderation.

Take the first step today. Start using Alleo for free and transform your social media moderation strategy.